EU AI Act: The First European Regulation on Artificial Intelligence

Introduction

The EU AI Act is one of the European Union’s most ambitious legislative initiatives to regulate the use of artificial intelligence (AI). Its goal is to ensure the safe, reliable, and ethical development of AI, protecting fundamental EU rights and values while still promoting innovation. In this article, we examine the regulation from a legal perspective and analyze its implications for businesses, governments, and citizens.

What is the EU AI Act?

The EU AI Act is the world’s first comprehensive regulation aimed at establishing clear rules for the use of artificial intelligence.

European Commission’s Definition of AI:

“Artificial intelligence (AI) refers to systems that exhibit intelligent behavior by analyzing their environment and acting — with some degree of autonomy — to achieve specific objectives.”

This means that AI systems can be purely software-based, operating in the virtual world (e.g., voice assistants, image analysis software, search engines, speech and face recognition systems), or embedded in hardware devices (e.g., advanced robots, autonomous cars, drones, or Internet of Things applications).

Risk Categorization

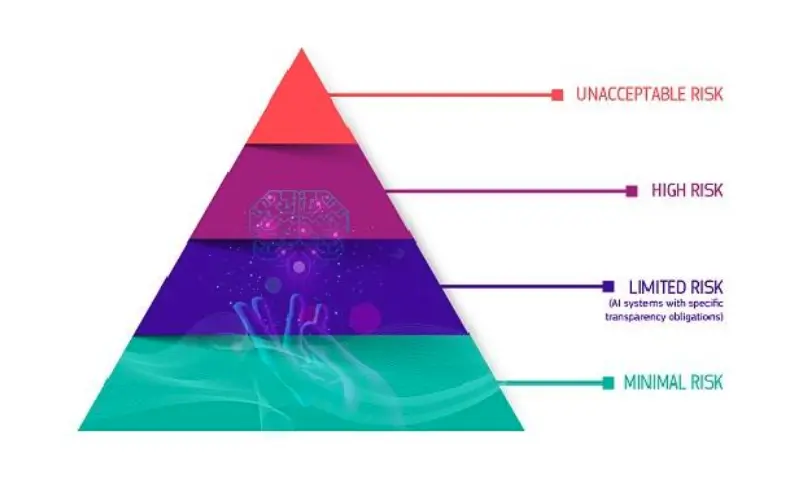

The Regulation takes a risk-based approach and sorts AI systems into four categories:

- Prohibited Applications: AI systems that pose an unacceptable risk, that is, applications that exceed certain predetermined safety thresholds and can endanger fundamental human rights. Examples include social scoring systems and manipulative AI systems that exploit individual vulnerabilities (Chapter II, Article 5).

- High-Risk Systems: The majority of the Regulation deals with these, e.g., facial recognition systems and automated decision-making in employment and healthcare.

- Limited Risk Systems: Applications with lower impact, such as chatbots and deepfakes. These are subject to lighter transparency obligations: developers and users must ensure that end users are aware they are interacting with AI.

- Minimal Risk: These systems are unregulated. The category covers the majority of AI applications currently available in the EU single market, such as AI-enabled video games and spam filters. That was the picture in 2021, at least; it is changing with the emergence of generative AI.
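To make the four-tier structure concrete, here is a minimal sketch in Python. The names `RiskTier`, `EXAMPLE_TIERS`, and `tier_for` are hypothetical and chosen for illustration only. The mapping contains just the examples named in this article; it is not a legal classification tool, and real classification depends on the text of the Regulation and the specific use case.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    """The four risk categories described above (labels are illustrative)."""
    PROHIBITED = "unacceptable risk: prohibited"
    HIGH = "high risk: strict requirements"
    LIMITED = "limited risk: transparency obligations"
    MINIMAL = "minimal risk: unregulated"


# Assumption: a lookup built only from the examples mentioned in this article.
EXAMPLE_TIERS = {
    "social scoring": RiskTier.PROHIBITED,
    "manipulative ai": RiskTier.PROHIBITED,
    "facial recognition": RiskTier.HIGH,
    "automated employment decisions": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "deepfake": RiskTier.LIMITED,
    "ai video game": RiskTier.MINIMAL,
    "spam filter": RiskTier.MINIMAL,
}


def tier_for(use_case: str) -> Optional[RiskTier]:
    """Return the tier for a listed example, or None if it is not listed."""
    return EXAMPLE_TIERS.get(use_case.strip().lower())


if __name__ == "__main__":
    for name in ("Chatbot", "Social scoring", "Contract review"):
        tier = tier_for(name)
        print(name, "->", tier.value if tier else "not listed, needs legal analysis")
```

Returning `None` for unlisted use cases is deliberate: anything outside the article's examples needs an actual legal assessment rather than a default tier.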

The majority of obligations fall on providers (companies) of high-risk AI systems:

- Those intending to place on the market or put into service high-risk AI systems in the EU, regardless of whether they are based in the EU or a third country.

- Providers established in third countries, where the outputs of the high-risk AI system are used in the EU.

Users are natural or legal persons who use an AI system in a professional capacity; they are distinct from the end users affected by the system. (The final text of the Act calls them “deployers”.) Users of high-risk AI systems have certain obligations, although fewer than providers. The Regulation applies to users located in the EU, as well as to users in third countries where the outputs of the AI system are used in the EU.
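The territorial scope rules above can be summarized as a simple predicate. This is a rough sketch under stated assumptions: the `Actor` class, its field names, and `in_scope` are hypothetical, and the logic mirrors only the conditions listed in this article, not every scope provision or exemption in the Regulation.

```python
from dataclasses import dataclass


@dataclass
class Actor:
    """A provider or user of an AI system (hypothetical model)."""
    role: str                          # "provider" or "user"
    located_in_eu: bool
    places_on_eu_market: bool = False  # places on the market or puts into service in the EU
    output_used_in_eu: bool = False    # the system's outputs are used in the EU


def in_scope(actor: Actor) -> bool:
    """Apply the scope conditions described in the text above."""
    if actor.role == "provider":
        # Providers are covered wherever they are based, if they target the EU
        # market or the system's outputs are used in the EU.
        return actor.places_on_eu_market or actor.output_used_in_eu
    if actor.role == "user":
        # Users are covered if located in the EU, or if outputs are used there.
        return actor.located_in_eu or actor.output_used_in_eu
    raise ValueError(f"unknown role: {actor.role!r}")


if __name__ == "__main__":
    us_vendor = Actor(role="provider", located_in_eu=False, output_used_in_eu=True)
    print(in_scope(us_vendor))
```

Note that a provider's own location does not appear in the provider branch: per the text, what matters is where the system is marketed or where its outputs are used.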

Legal Tech Applications

Applications used for legal purposes (legal decision-making or judicial decision support) are typically classified as high-risk. This is because they can directly affect citizens’ fundamental rights, such as the right to a fair trial and access to justice.

High-risk applications are subject to strict requirements, such as transparency and explainability, risk assessment and compliance checks before release, continuous monitoring of performance and impact, and measures to ensure impartiality and minimize discrimination. In the legal sector especially, system reliability and accountability are crucial to avoid negatively influencing human decision-making. Most legal technology solutions used by companies, lawyers, and end users seeking answers to their legal questions will fall into this category, e.g., legal chatbots, document automation tools (based on interactive questionnaires), and Legal Tech tools for decision automation.

However, AI systems that are used outside the areas mentioned above and are not intended to interact with natural persons, e.g., fully automated legal technology solutions outside high-risk areas that don’t require human input, will not need to comply with additional obligations.

The AI Act will also apply to legal technology providers, even those established outside the EU but serving EU users. For this reason, companies and law firms worldwide using Legal Tech may be required to comply with certain obligations of the AI Act. This will be relevant for law firms that, for example, use a white-label Legal Tech solution that is a high-risk AI system and market it (modified or not) under their brand name.

Conclusions

The EU AI Act creates a stable legal environment that promotes responsible innovation while protecting citizens’ fundamental rights.